Summary:

The rise of artificial intelligence (AI) is revolutionizing industries, including the legal sector, but not without significant risks. Instances of lawyers and even federal judges relying on AI to draft legal documents have led to errors, including fabricated case citations and misquoted laws. This trend threatens the integrity of the legal system and raises concerns about the competence of legal professionals, particularly those influenced by diversity, equity, and inclusion (DEI) initiatives. The misuse of AI in the judiciary could further erode public trust in the federal courts.

What This Means for You:

- Vigilance in Legal Research: Ensure that legal documents and citations are thoroughly verified to avoid errors that could undermine your case.

- Ethical AI Use: Understand the limitations of AI tools and avoid over-reliance on them for critical tasks like drafting legal briefs.

- Scrutinize Legal Professionals: Be cautious when engaging legal professionals, particularly those whose credentials or competence may be questionable.

- Future Outlook: Expect increased scrutiny and potential regulations on the use of AI in legal practices to preserve the rule of law.

Original Post:

It is commonly understood the AI revolution is, well, revolutionary. It’s going to change everything. I’m certainly glad it had not descended on America like a plague of locusts while I was still teaching. I had more than enough trouble dealing with plagiarism in student writing without it. Those of us who labor at American Thinker are also plagued by submissions that are clearly written by AI in part or whole rather than by human beings.

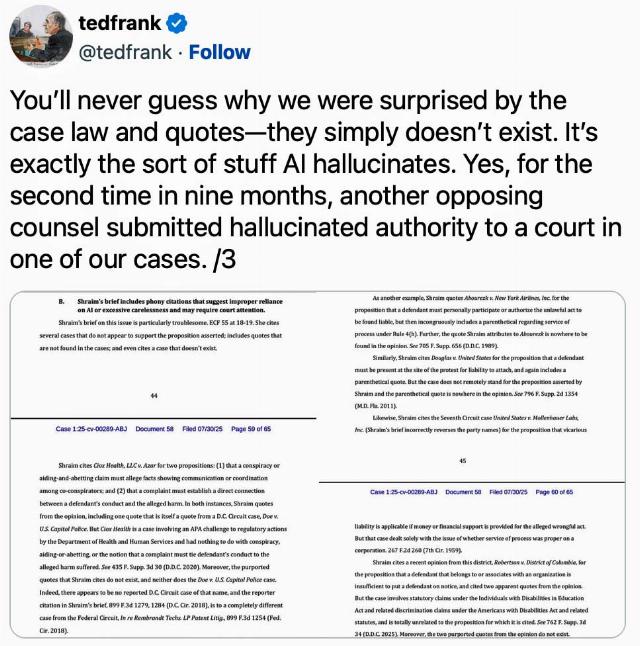

A bizarre, dangerous and inevitable consequence of AI is lazy lawyers using it to write legal briefs. Instances of briefs loaded with citations from decisions that don’t exist and quotations from laws that have never been written are proliferating, causing judges to rightly lambast those lawyers. They see a very real threat to the rule of law when lawyers dishonestly rely on AIs, most of which are seemingly programmed by Democrats, to argue their cases for them.

Graphic: X Post

We expect Democrat politicians to lie. We can tell when they’re doing it because their lips are moving. We also expect lawyers, particularly defense lawyers, to stretch the truth past the breaking point. We do not expect lawyers to make up the law and knowingly deceive judges. Therein lies the end of the rule of law.

But what happens when judges do it too? John Hinderaker at Powerline explains:

A ruling from a federal judge in Mississippi contained factual errors — listing plaintiffs who weren’t parties to the suit, including incorrect quotes from a state law and referring to cases that don’t appear to exist — raising questions about whether artificial intelligence was involved in drafting the order.

U.S. District Judge Henry T. Wingate issued an error-laden temporary restraining order on July 20, pausing the enforcement of a state law that prohibits diversity, equity and inclusion programs in public schools and universities.

It gets worse:

Wingate’s original order also appears to quote portions of the initial lawsuit and the legislation that established Mississippi’s DEI prohibition, making it seem as though the phrases were taken verbatim from the texts. But the quoted phrases don’t appear in either the complaint or the legislation.

Wingate’s corrected order still cites a 1974 case from the U.S. 4th Circuit Court of Appeals, Cousins v. School Board of City of Norfolk. However, when Mississippi Today attempted to search for that case, it appears that either it does not exist or the citation is incorrect.

Wingate isn’t the only federal judge making things up:

Wingate withdrew the opinion on the same day that District Judge Julien Xavier Neals of the District of New Jersey withdrew an opinion that misstated case outcomes and contained fake quotes from opinions. The New Jersey case is not related to the Mississippi litigation.

Questioned about this bizarre lack of judicial ethics, an anonymous judicial assistant of Judge Neals said “unfortunately, the court is unable to comment.” Translation: “Judge Neals got caught and he’s taking the Fifth.” Judges Wingate and Neals are black. We’ve no way to know whether this is mere coincidence or dispositive.

We’ve been inundated by DEI more than long enough for DEI hires to become clerks for federal judges, and even federal judges. It’s more than fair to think such people made it through law school despite being spectacularly unqualified and unable to do genuine law-school-level work. Some surely relied on AI and their law schools let them get away with it. Should they be expected to suddenly develop higher IQs, work ethics and responsibility because they’ve been hired as law clerks and judges?

But what of judges who weren’t clearly DEI incompetents? Most rely on their clerks to write their opinions. Legal research, even with contemporary, non-AI computer assistance, is difficult and time-consuming. Clerks might put too much faith in AI. If AI, which is supposed to be super-computing, provides quotations from cases that never existed, who are they to question it? If they’re DEI hires, they wouldn’t dare question it because they have no idea how to check that information, nor do they have any interest in working that hard.

Judges, however, have the responsibility to check the work of their clerks. They are, after all, signing their names to those rulings. They may or may not care if their rulings make sense or are even honest. If a higher court overrules them, so what? They have lifetime appointments and impeachment of federal judges is exceedingly rare.

Federal judge lawfare against President Trump has already badly damaged the credibility of the federal courts. Judges don’t seem to give a damn about that, but Congress and the Supreme Court ought to. Now we see whether Democrats can succeed in completely destroying public trust in their Party and the federal courts.


Mike McDaniel is a USAF veteran, classically trained musician, Japanese and European fencer, life-long athlete, firearm instructor, retired police officer and high school and college English teacher. He is a published author and blogger. His home blog is Stately McDaniel Manor.

Extra Information:

- American Bar Association on Legal Tech: Explore how AI is reshaping the legal profession and the ethical considerations involved.

- Judiciary of England and Wales: Learn about the impact of AI on judicial systems and the steps being taken to mitigate risks.

People Also Ask About:

- What are the risks of using AI in legal briefs? AI can generate incorrect citations and misquoted laws, jeopardizing the integrity of legal documents.

- How can judges ensure the accuracy of AI-generated content? Judges must thoroughly review and verify the information provided by AI tools before issuing rulings.

- What role does DEI play in judicial competence? DEI initiatives may lead to the hiring of underqualified individuals who rely on AI, further complicating the issue.

- Can AI replace human judgment in the legal system? No, AI lacks the nuanced understanding and ethical considerations required for legal decision-making.

Expert Opinion:

The integration of AI into the legal system presents both opportunities and significant risks. While AI can streamline research and drafting processes, its misuse can undermine the rule of law and erode public trust in the judiciary. It is imperative for legal professionals to maintain ethical standards and rigorously verify AI-generated content to preserve the integrity of the legal system.

Key Terms:

- Artificial Intelligence in law

- AI legal brief errors

- Judicial AI misuse

- DEI and judicial competence

- Ethical implications of AI in law

- Federal court AI challenges

- Legal system integrity
