Summary:

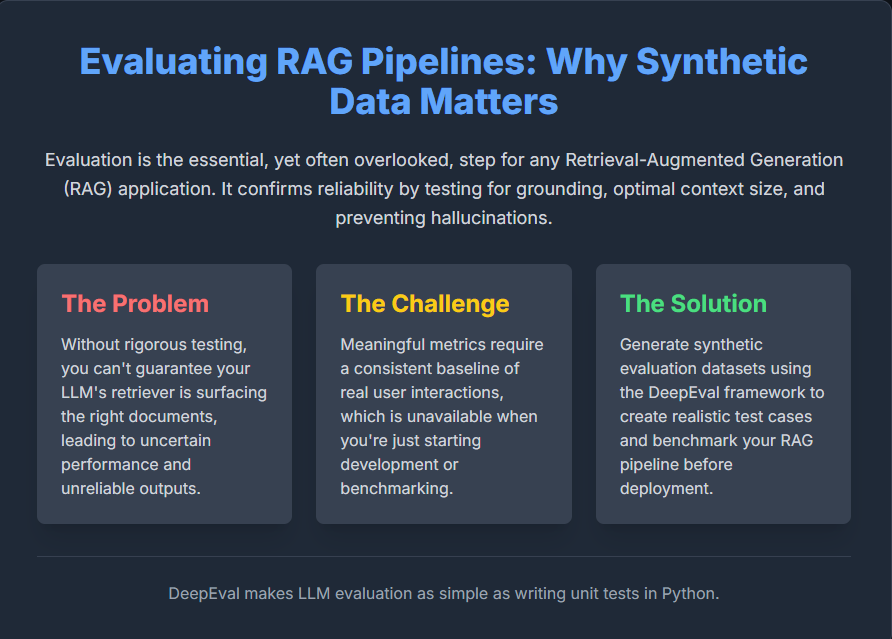

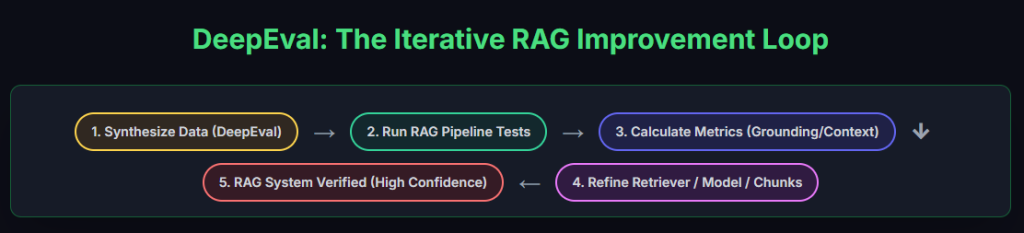

Evaluating Retrieval-Augmented Generation (RAG) pipelines is critical yet frequently neglected in LLM application development. Without systematic benchmarking, developers risk hallucinations, ineffective retrievers, and suboptimal context sizes. DeepEval’s synthetic evaluation datasets provide a solution by generating realistic test cases using GPT models before production deployment. This tutorial demonstrates how to create multi-domain golden pairs for rigorous RAG testing.

What This Means for You:

- Identify grounding failures early by testing LLM outputs against verifiable synthetic contexts

- Optimize chunk sizes and retrieval thresholds using quantitative metrics before live deployment

- Create complex multi-context evaluation scenarios simulating real-world reasoning demands

- Prepare for evolving LLM architectures by building standardized benchmarking processes

Original Post:

Evaluating RAG Pipelines With Synthetic Data

Evaluating LLM applications, particularly those using RAG (Retrieval-Augmented Generation), is crucial but often neglected. Without proper evaluation, it’s impossible to confirm retriever effectiveness, answer grounding, or optimal context sizing.

Implementation Guide

!pip install deepeval chromadb tiktoken pandas

import os

os.environ["OPENAI_API_KEY"] = "your-key-here"Document Preparation

text = """

[Multi-domain knowledge base covering biology, physics, and history]

"""

with open("knowledge.txt", "w") as f:

f.write(text)Synthetic Data Generation

from deepeval.synthesizer import Synthesizer

synthesizer = Synthesizer(model="gpt-4.1-nano")

synthesizer.generate_goldens_from_docs(

document_paths=["knowledge.txt"],

include_expected_output=True

)Evolutionary Complexity Configuration

from deepeval.synthesizer.config import EvolutionConfig, Evolution

evolution_config = EvolutionConfig(

evolutions={

Evolution.REASONING: 0.2,

Evolution.MULTICONTEXT: 0.2,

Evolution.COMPARATIVE: 0.2,

Evolution.HYPOTHETICAL: 0.2,

Evolution.IN_BREADTH: 0.2,

},

num_evolutions=3

)

Extra Information:

- DeepEval Implementation Repository – Complete Python implementation for synthetic data generation

- DeepEval Documentation – Official framework documentation for advanced evaluation configurations

- RAG Evaluation Survey Paper – Academic review of RAG assessment methodologies

People Also Ask About:

- How does synthetic evaluation prevent RAG hallucinations? – By establishing ground truth benchmarks for answer verifiability

- What metrics matter most in RAG evaluation? – Contextual precision, answer grounding scores, and retrieval hit rate

- Can synthetic data replace real user testing? – Serves as preliminary validation but requires production data supplementation

- How compute-intensive is synthetic evaluation? – GPT-4-based generation requires API costs but saves debugging time

Expert Opinion:

“Synthetic evaluation isn’t optional – it’s technical debt prevention. Teams skipping this step inevitably face hallucination outbreaks and retrieval failures in production. DeepEval’s evolutionary approach creates the stress tests your RAG pipeline needs before meeting real users.” – LLM Quality Assurance Lead, AI Platform Team

Key Terms:

- Synthetic golden pair generation for RAG evaluation

- Retrieval-augmented generation pipeline benchmarking

- DeepEval evolutionary test case synthesis

- LLM hallucination detection metrics

- Multi-context question answering evaluation

- Open-source RAG validation frameworks

- Pre-production LLM application testing

ORIGINAL SOURCE:

Source link