Summary:

LMArena is an open-source platform for benchmarking and interacting with leading large language models (LLMs) such as GPT-4, Claude 3, and Gemini Pro from a single interface. This community-driven tool requires no subscription and publishes transparent performance rankings derived from crowd-sourced “battles.” For developers and AI enthusiasts, it offers free side-by-side testing across text generation, image synthesis, and multimodal tasks. Its leaderboard, which updates as users vote, gives an alternative to vendor-published benchmarks.

What This Means for You:

- Compare performance without API costs: Test proprietary models like Claude Opus against open-source alternatives before implementation

- Make informed model selections: Leverage crowd-voted rankings for complex NLP tasks like code generation or creative writing

- Audit hallucination rates: Run anonymous “battles” on the same prompt to compare how reliably different models answer

- Monitor ecosystem shifts: Anticipate usage limits as the platform gains adoption among enterprise users

Original Post:

Large Language Models (LLMs) power AI chatbots like ChatGPT and Gemini, yet hundreds remain underutilized due to fragmented access. LMArena solves this through centralized, no-cost LLM benchmarking and interaction.

What is LMArena?

This open-source platform enables comparative analysis via crowd-sourced model “battles,” ranking performance across text completion, vision processing, and text-to-image generation tasks.

Accessing Premium LLMs

1. Navigate to LMArena

2. Select models via the Battle dropdown

3. Generate multimodal content through Direct Chat mode

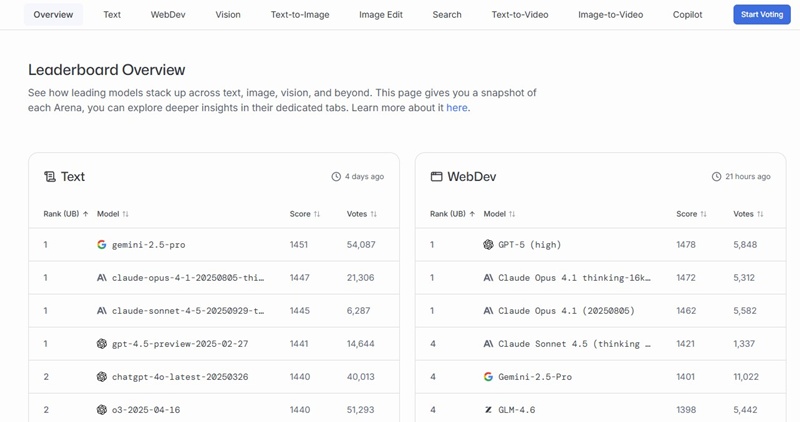

Leaderboard Analysis

The interactive leaderboard tracks model performance across 8 capability verticals, updating dynamically with user votes.
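To make the idea of a vote-driven leaderboard concrete, here is a minimal sketch of how pairwise “battle” votes can be turned into ratings with a plain online Elo update. This is an illustration under stated assumptions, not LMArena's actual implementation: the step size `K`, the starting rating, the vote format, and the model names are all hypothetical.

```python
from collections import defaultdict

K = 32                 # assumed update step size (hypothetical, not LMArena's value)
BASE_RATING = 1000.0   # assumed starting rating for every model

def expected_score(rating_a, rating_b):
    """Probability that A beats B under the standard Elo logistic model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))

def update_ratings(votes, k=K, base=BASE_RATING):
    """Apply one Elo update per vote.

    votes: iterable of (model_a, model_b, outcome), where outcome is
    "a" (A preferred), "b" (B preferred), or "tie".
    """
    ratings = defaultdict(lambda: base)
    score_for_a = {"a": 1.0, "b": 0.0, "tie": 0.5}
    for model_a, model_b, outcome in votes:
        exp_a = expected_score(ratings[model_a], ratings[model_b])
        delta = k * (score_for_a[outcome] - exp_a)
        ratings[model_a] += delta   # winner gains what the loser gives up
        ratings[model_b] -= delta
    return dict(ratings)

if __name__ == "__main__":
    # Hypothetical votes; the model names are placeholders.
    sample_votes = [
        ("model-x", "model-y", "a"),
        ("model-y", "model-z", "tie"),
        ("model-z", "model-x", "b"),
    ]
    leaderboard = update_ratings(sample_votes)
    for name, rating in sorted(leaderboard.items(), key=lambda kv: -kv[1]):
        print(f"{name}: {rating:.1f}")
```

Each vote moves the two models' ratings by equal and opposite amounts, so an upset win against a higher-rated model shifts the ranking more than an expected win does.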

Extra Information:

- Chatbot Arena – The earlier name of LMArena, known for ranking LLMs with Elo-style scoring

- Hugging Face Models – Technical LLM repository with inference APIs

- Papers With Code LLM Benchmarks – Academic performance metrics

People Also Ask About:

- Is LMArena completely free? Yes. It currently offers access without a subscription, though usage limits may appear as adoption grows.

- How reliable are crowd-sourced rankings? Statistical safeguards are used to minimize the risk of vote manipulation.

- Does using LMArena violate LLM terms of service (ToS)? It operates under fair-use research provisions.

- How frequently do leaderboards update? Rankings adjust in real time as votes arrive, with extra weight given to votes from expert users.
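On the reliability question above, one way to judge a crowd-sourced result is to look at the uncertainty around a head-to-head win rate rather than the raw percentage. The sketch below computes a Wilson score interval for one model's win rate against another. It is an illustration only: it does not detect vote manipulation, it is not described in the original post as LMArena's method, and the vote counts are made up. Ties are assumed to be excluded before counting.

```python
import math

def wilson_interval(wins, total, z=1.96):
    """Wilson score interval for a win rate.

    wins:  number of decisive votes won by the model
    total: number of decisive votes (ties excluded, by assumption)
    z:     normal quantile; 1.96 corresponds to roughly 95% confidence
    """
    if total <= 0:
        raise ValueError("total must be positive")
    p = wins / total
    denom = 1.0 + z * z / total
    center = (p + z * z / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z * z / (4 * total * total)) / denom
    return center - half, center + half

if __name__ == "__main__":
    # Same 60% win rate, very different certainty (hypothetical counts).
    for wins, total in [(12, 20), (60, 100), (600, 1000)]:
        low, high = wilson_interval(wins, total)
        print(f"{wins}/{total}: {low:.3f} to {high:.3f}")
```

With only a few votes, the interval is wide enough to include 50%, which means the apparent lead could be noise. The interval narrows as more votes accumulate.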

Expert Opinion:

“Platforms like LMArena disrupt proprietary benchmarking by establishing community-driven evaluation standards. As LLM capabilities converge, transparency in hallucination rates and contextual misunderstanding becomes the critical differentiator.” – Dr. Elena Torres, NLP Research Lead

Key Terms:

- Free LLM access platform

- Multi-model AI benchmarking

- Crowd-sourced LLM ranking

- Proprietary model comparison tool

- Enterprise LLM performance metrics

- Hallucination rate evaluation
