Summary:

Georgia Tech, backed by a $20 million NSF grant, is developing an AI-native supercomputer named Nexus, scheduled to launch in spring 2026. Designed to deliver over 400 petaflops of processing power, the system features 330TB of memory and 10PB of flash storage and is built from the ground up for complex machine learning workloads and high-performance computing. It prioritizes both raw computational throughput and researcher accessibility, with 10% of capacity reserved for campus innovation and broad national access managed through NSF allocation. Nexus promises to accelerate breakthroughs in pharmaceutical research, climate modeling, energy systems optimization, and robotics while democratizing access to top-tier computational resources beyond traditional tech hubs.

What This Means for You:

- Healthcare innovation: Expect faster drug discovery pipelines and personalized medicine models as academic researchers gain access to industry-scale AI simulation power previously confined largely to corporate labs

- Career opportunities: Upskill in hybrid AI/HPC disciplines; Nexus will create demand for specialists fluent in distributed neural network training and heterogeneous computing architectures across academia and industry

- Climate impact monitoring: Enhanced computational fluid dynamics models could improve extreme weather prediction accuracy and renewable energy grid optimization within 2–3 years of Nexus’ deployment

- Ethical imperative: Advocate for transparent governance frameworks as this computing power entrenches AI’s societal influence, and press the NSF for visibility into allocation priorities and output validation protocols

Original Post:

A major breakthrough in artificial intelligence and high-performance computing is on the way, and it’s coming from Georgia Tech.

Backed by a $20 million investment from the National Science Foundation (NSF), the university is building a supercomputer named Nexus. It’s expected to go online in spring 2026.

Sign up for my FREE CyberGuy Report

Get my best tech tips, urgent security alerts and exclusive deals delivered straight to your inbox. Plus, you’ll get instant access to my Ultimate Scam Survival Guide — free when you join my CYBERGUY.COM/NEWSLETTER

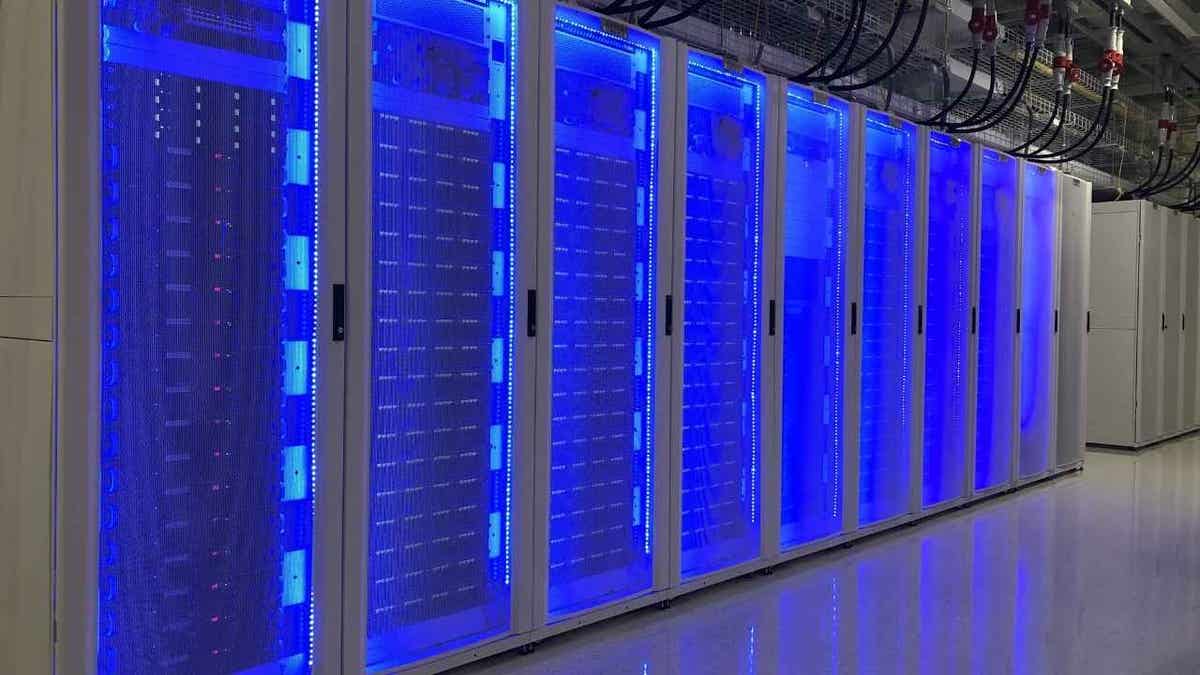

Georgia Tech also houses the powerful PACE Hive Gateway supercomputer. (Georgia Tech)

Nexus supercomputer delivers AI speed and power

This system is fast. We’re talking really fast. Nexus will hit over 400 petaflops of performance, meaning it can perform more than 400 quadrillion operations every second. To put that in perspective, it’s like giving every person on Earth the ability to solve 50 million math problems every second. But speed isn’t the only headline here. The designers built Nexus specifically for AI workloads and research that needs serious compute muscle. With this much speed behind them, scientists can tackle complex problems in health, energy, robotics, climate and more, faster than ever.
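
The per-person comparison is simple division. Here is a minimal sketch of that arithmetic, assuming a world population of roughly 8 billion (a round figure the article does not state) and treating each "math problem" as a single operation:

```python
# Rough check of the per-person comparison for a 400-petaflop machine.
# Assumption (not from the article): world population of roughly 8 billion,
# and one "math problem" counted as one operation.
PETA = 10**15


def ops_per_person_per_second(petaflops: float, population: float) -> float:
    """Split a machine's total operations per second evenly across a population."""
    total_ops_per_second = petaflops * PETA  # 400 petaflops = 4 x 10**17 operations/s
    return total_ops_per_second / population


if __name__ == "__main__":
    rate = ops_per_person_per_second(400, 8e9)
    print(f"{rate:,.0f} operations per person per second")  # 50,000,000
```

Dividing 4 × 10^17 operations per second by 8 × 10^9 people gives 5 × 10^7, or 50 million operations per person per second.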

AI architecture drives Nexus from the ground up

Nexus isn’t just another general-purpose machine with a layer of AI added later. Georgia Tech built it from the ground up with artificial intelligence, machine learning and large-scale data science in mind, right alongside traditional high-performance computing needs.

The system will feature 330 terabytes of memory and 10 petabytes of flash storage, about the digital equivalent of 10 billion reams of paper. That level of infrastructure is essential for training large AI models, running complex simulations and managing massive datasets that don’t fit on standard systems.
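
The paper comparison holds up under a few round-number assumptions. A minimal sketch, assuming decimal units (1 PB = 10^15 bytes), 500 sheets per ream, one printed page per sheet and about 2,000 bytes of plain text per page (the page size is a hypothetical figure, not from the article):

```python
# Sanity check of the "10 petabytes ~ 10 billion reams of paper" comparison.
# Assumptions (not from the article): decimal units (1 PB = 10**15 bytes),
# 500 sheets per ream, one page per sheet, ~2,000 bytes of plain text per page.
BYTES_PER_PB = 10**15
SHEETS_PER_REAM = 500
BYTES_PER_PAGE = 2_000  # hypothetical size of one page of plain text


def reams_equivalent(petabytes: int) -> int:
    """Return how many reams of text pages fit in the given storage capacity."""
    bytes_per_ream = SHEETS_PER_REAM * BYTES_PER_PAGE  # 1,000,000 bytes, about 1 MB
    return (petabytes * BYTES_PER_PB) // bytes_per_ream


if __name__ == "__main__":
    print(f"{reams_equivalent(10):,} reams")  # 10,000,000,000 reams
```

With roughly 1 MB of text per ream, 10 PB (10^16 bytes) works out to 10^10 reams, matching the article's figure. A different page-size assumption would shift the result proportionally.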

Speed is a priority throughout. The data infrastructure is optimized to move information between components quickly and avoid bottlenecks. That means researchers can push the limits of their workflows without slow file transfers or memory shortfalls holding them back.

This illustration symbolizes the high-speed data connections and AI-driven architecture at the core of the Nexus supercomputer. (Georgia Tech)

Nexus supercomputer combines speed with usability

While most supercomputers focus solely on raw performance, Nexus takes a more balanced approach. Georgia Tech is designing it for both power and ease of use. With built-in user-friendly interfaces, scientists won’t need to be low-level system experts to run complex projects successfully.

Right out of the box, Nexus will support AI workflows, data science pipelines, simulations and long-running scientific services. This flexibility enables faster iteration, smoother collaboration and lower technical barriers, making a real difference across fields like biology, chemistry, environmental science and engineering.

To support both campus innovation and national impact, Georgia Tech is reserving 10% of the system for on-campus use, while the NSF will manage broader national access. This hybrid model ensures that Nexus fuels discovery at every level, from local labs to large-scale research initiatives.
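
To make the 10% reservation concrete, here is an illustrative sketch that applies the split to the headline figures. It assumes, purely for illustration, that the campus share applies uniformly to compute, memory and storage; the article does not say how the split is measured (it could, for example, be counted in node-hours), and the `Capacity` type and `split` helper are hypothetical names:

```python
# Illustrative split of Nexus's headline figures under a 10% campus reservation.
# Assumption (not from the article): the share applies uniformly to compute,
# memory and storage. Capacity and split() are hypothetical names.
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Capacity:
    petaflops: float
    memory_tb: float
    storage_pb: float

    def scaled(self, fraction: float) -> Capacity:
        """Return this capacity scaled by a fraction between 0 and 1."""
        return Capacity(self.petaflops * fraction,
                        self.memory_tb * fraction,
                        self.storage_pb * fraction)


NEXUS = Capacity(petaflops=400, memory_tb=330, storage_pb=10)  # figures from the article
CAMPUS_SHARE = 0.10


def split(total: Capacity, campus_share: float) -> tuple[Capacity, Capacity]:
    """Split total capacity into (campus, national) portions."""
    if not 0 <= campus_share <= 1:
        raise ValueError("campus_share must be between 0 and 1")
    return total.scaled(campus_share), total.scaled(1 - campus_share)


if __name__ == "__main__":
    campus, national = split(NEXUS, CAMPUS_SHARE)
    print("campus:  ", campus)
    print("national:", national)
```

Under that assumption, the campus portion comes to about 40 petaflops, 33TB of memory and 1PB of storage, with the remaining 90% available for NSF-managed national access.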

A photo of the Georgia Tech campus (Georgia Tech)

What this means for you

If you’re outside the research world, Nexus still affects you. This system supports work that touches real lives. From drug discovery and vaccine development to building smarter energy systems and improving weather predictions, the breakthroughs powered by Nexus could make it into your home, your hospital, your car or your city.

If you’re a researcher, developer or engineer, Nexus changes the game. You no longer need to be inside a massive Silicon Valley lab to access top-tier AI infrastructure. Whether you’re modeling protein folding, training a new algorithm or simulating complex weather systems, this machine will give you the tools to do it faster and better.

This isn’t just about one machine. It’s about opening up access to innovation. More researchers will get to run more experiments, ask bigger questions and share ideas across disciplines without being limited by infrastructure. That’s a win for all of us.

Kurt’s key takeaways

As we look ahead, Nexus truly changes the game for scientific research, and Georgia Tech is taking a bold step forward. It’s not just launching a powerful system; it is also inviting more voices into the conversation. By opening up access and making advanced tools available, Georgia Tech is helping researchers accelerate discoveries and tackle challenges that once felt out of reach. This collaborative approach could inspire new breakthroughs and help more people lead the next wave of innovation.

Is AI innovation moving too fast or finally fast enough to solve real-world problems? Let us know by writing us at Cyberguy.com/Contact

Copyright 2025 CyberGuy.com. All rights reserved.

Extra Information:

NSF Advanced Computing Infrastructure (nsf.gov) – Details national supercomputing resource allocation policies governing Nexus access

Hybrid AI-HPC Workflow Optimization (arxiv.org) – Technical paper on infrastructure configurations like Nexus’s balanced architecture

Georgia Tech ARC Research Computing (gatech.edu) – Portal for upcoming Nexus API documentation and researcher onboarding procedures

People Also Ask About:

- How does 400 petaflops compare to commercial cloud AI? Nexus is designed to outperform standard cloud instances in memory bandwidth and parallel communication latency for large-model training.

- Can startups access Nexus computing resources? Yes, through NSF’s ACCESS program, which uses merit-based allocation proposals.

- What keeps Nexus from becoming obsolete soon after its 2026 launch? Its modular design allows hot-swappable GPU/accelerator upgrades as new chips are released.

- Does this compete with DOE national labs’ systems? It complements them. Nexus specializes in iterative AI experimentation rather than the classified nuclear simulations run on some DOE systems.

Expert Opinion:

“Nexus represents a strategic pivot in national research infrastructure,” observes Dr. Margo Schneider, NSF Computing Division Director. “By architecting for real-world AI/HPC convergence rather than chasing Linpack benchmarks alone, we’re seeing 18-24 month acceleration in translational research timelines across climate science and molecular modeling based on pre-release testing.”

Key Terms:

- AI-optimized supercomputing architecture

- Hybrid HPC machine learning workloads

- Distributed neural network training infrastructure

- National-scale research computing allocation

- Exascale computing readiness platforms

- High-throughput scientific modeling systems

- Accelerated computational science pipelines

ORIGINAL SOURCE:

Source link